Dear community! 🙏

The topic of AI is omnipresent.

Many are loudly warning that AI is now a stock market bubble that cannot live up to the high expectations in the short and medium term.

What is certain is that LLMs such as ChatGPT or Gemini have become an integral part of many people's lives.

Still far from "perfect", generative AI nevertheless exudes a surreal magic that we could not have imagined in our wildest dreams just five years ago.

Large companies, such as Accenture recently, are laying off staff on a massive scale as part of a major "AI restructuring".

It seems clear that AI will soon cause massive upheaval in business and society.

Last week, I received my pre-order of Eliezer Yudkowsky's new book on "superintelligent AI" and devoured it in two days.

Although this post is not directly about stock market investing, I think the topic is important enough to interest you.

Buckle up for a round of existential angst on Monday 😎✨

__________________________

❓What exactly is this about?

Yudkowsky, the author, is co-founder of the "Machine Intelligence Research Institute" in Berkeley and warned of the existential risks of advanced AI systems over 20 years ago.

The title of his latest book, "If Anyone Builds It, Everyone Dies", initially sounds like a lurid exaggeration ...

Although I knew the basic thesis on the dangers of AGI (Artificial General Intelligence) in advance, I had never given it much thought.

With billions and billions flowing into the sector, clever minds will surely think about security (AI alignment) ... right? 🤔

To anticipate the core message of the book:

Yudkowsky and co-author Nate Soares go so far as to say that if we keep advancing the capabilities of artificial intelligence and training better and better models, it will lead to the certain demise of humanity.

__________________________

⬛How LLMs work and the black-box reality:

We know surprisingly little about how LLMs work internally.

Research in "mechanistic interpretability" is working on this, but a general, scalable understanding is still lacking.

This is fundamentally due to the fact that LLMs are not "programmed" but "grown", in a way loosely analogous to biological evolution.

The following explanation is rudimentary, but sufficient to illustrate the problem:

A transformer model consists of billions of parameters (weights) that are initially set randomly.

During training, it is given tasks in the form of texts and attempts to predict the next word (more precisely, the next token).

Based on the error between the prediction and the correct answer, gradient descent is used to calculate in which direction and to what extent each weight must be changed in order to improve the result.

This process is repeated over an unimaginable number of texts, with the weights constantly adapting.

In this way, the transformer gradually "learns" language patterns, meaning and context until it can write coherently.
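The loop described above can be sketched in a few lines of Python. This is a toy and not a transformer: the function `train_bigram`, the example sentence and the settings `steps` and `lr` are all assumptions made for illustration. The model only looks at the previous word, but the mechanics are the same: random starting weights, a prediction, an error, and a small correction of every weight via gradient descent.

```python
import math
import random


def train_bigram(text, steps=200, lr=1.0, seed=0):
    """Train a toy next-word predictor with plain gradient descent.

    Hypothetical illustration: one row of weights per word, used as
    scores (logits) for which word comes next.
    """
    words = text.split()
    vocab = sorted(set(words))
    index = {w: i for i, w in enumerate(vocab)}
    # Training examples: (current word, next word) pairs from the text.
    pairs = [(index[a], index[b]) for a, b in zip(words, words[1:])]
    n = len(vocab)

    rng = random.Random(seed)
    # The weights start out random.
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n)]

    def softmax(row):
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        return [e / total for e in exps]

    def loss():
        # Average cross-entropy: how surprised the model is by the true next word.
        return -sum(math.log(softmax(W[a])[b]) for a, b in pairs) / len(pairs)

    history = [loss()]
    for _ in range(steps):
        grad = [[0.0] * n for _ in range(n)]
        for a, b in pairs:
            probs = softmax(W[a])
            for j in range(n):
                # Gradient of the cross-entropy w.r.t. logit j: p_j - 1[j == b]
                target = 1.0 if j == b else 0.0
                grad[a][j] += (probs[j] - target) / len(pairs)
        # Move every weight a small step against its gradient.
        for i in range(n):
            for j in range(n):
                W[i][j] -= lr * grad[i][j]
        history.append(loss())

    def predict(word):
        probs = softmax(W[index[word]])
        return vocab[max(range(n), key=probs.__getitem__)]

    return history, predict


history, predict = train_bigram("the cat sat on the mat and the cat slept")
print(f"loss before training: {history[0]:.3f}, after: {history[-1]:.3f}")
print("most likely word after 'the':", predict("the"))
```

Nobody wrote a rule saying that "cat" tends to follow "the" in this sentence. The rule ends up encoded in the numbers in `W`, which is the black-box problem in miniature.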

__________________________

😶🌫️Invisible preferences:

Systems that grow via gradient descent can learn goals that do not correspond to our intentions.

They optimize a training objective and pick up internal heuristics or "values" in the process.

This learning dynamic gives rise to instrumental goals (securing resources, avoiding shutdown), which can collide with human goals.

Behavior of this kind has already been observed in experiments with AI models and supports the fear of hidden preferences that only become visible outside of training.

The "alignment" problem is therefore, as things stand today, unsolved.

__________________________

🚀Race dynamics (states & Big Tech):

In my opinion, this is the main reason why the known risks are simply being ignored.

Who slows down first?

Capital and governments are pushing forward with compute, talent, power and chips.

Stanford's AI Index report shows record investments, massive government programs and ever faster scaling at the frontier.

Here is a paraphrased passage from the book that illustrates the problem:

Several companies are climbing upward as if on a ladder in the dark.

Each rung brings enormous financial gains (10 billion, 50 billion, 250 billion USD, etc.). But no one knows where the ladder ends - and whoever reaches the top rung causes the ladder to explode and destroys everyone.

Nevertheless, no company wants to be left behind as long as the next rung is seemingly safe.

Some managers even believe that only they themselves can control the "explosion" and turn it into something positive - and therefore feel obliged to keep climbing.

The same dilemma also applies to states: No country wants to weaken its economy through strict AI regulation, while other countries continue their research unabated. Perhaps, so the thinking goes, the next step is even necessary to safeguard national security.

The problem could be solved more easily if science could determine exactly at what performance limit AI becomes truly dangerous. For example: "The fourth rung is deadly" or "Danger looms above 257,000 GPUs". But there is no such clear limit.

A potential, real "Tragedy of the Commons". 🤷♂️

In theory, LLMs could become arbitrarily "intelligent" as long as they have enough parameters, data and computing power.

This is usually justified with the universal approximation theorem: a sufficiently large neural network can approximate (with enough precision) any continuous function on a bounded domain, which in principle includes an arbitrarily "intelligent" one.

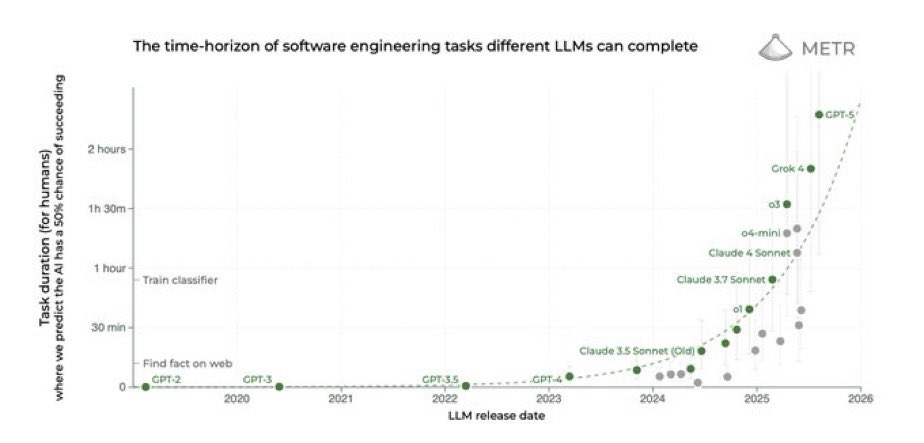

The development of capabilities that we are currently seeing seems to follow an exponential curve.

Nobody knows how much compute we really need to achieve AGI.

We may get to that point in five years, in ten, twenty or thirty years.

__________________________

🔮The most controversial part of the book. The forecast:

The book paints the extinction of humanity in less than 20 years as plausible if we don't stop AI development.

Whether you believe the figure or not, the timelines of many researchers have shortened significantly in recent years.

The AI Impacts Surveys (e.g. 2016, 2022, 2023) have surveyed AI researchers worldwide, especially those who publish at major conferences such as NeurIPS, ICML or AAAI.

The surveys show a clear trend towards shorter AGI timelines and significant p(doom) values. (p(doom) refers to the estimated probability that humanity will be wiped out by artificial intelligence.)

⏱️AGI/HLMI timing (50% chance that AI will perform all tasks better and cheaper than humans - "high-level machine intelligence"):

- 2016: ~2061

- 2022: ~2059

- 2023 (published 2024): 2047

The jump 2022 → 2023 was roughly -12 to -13 years. 🫨

💣p(doom) - "extremely poor outcomes"

- 2022: Median 5%; 48% of respondents state at least 10%.

- 2023 survey: 38-51% state at least 10% for scenarios "as bad as extinction".

Not consensus, but far from zero.

We are talking here about a large share of researchers putting the probability that humanity will be wiped out at 10% or more. 🤯

__________________________

Well, hasn't humanity already survived various asymmetric risks?

What about nuclear weapons, for example? 🤔

Nuclear weapons risks are iterative.

Humanity can narrowly escape several times (as in 1962, 1983, 1995).

AGI, on the other hand, is a one-time event:

When a system with uncontrollable power emerges, there is no turning back and no second chance.

"You only get one shot at aligning superintelligence, and you can't debug it afterwards."

- Eliezer Yudkowsky

This means that the expected loss is catastrophic, even if the probability is "small" (~10% according to many of the AI researchers surveyed).
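The expected-value argument can be made concrete with a small calculation. A minimal sketch, assuming a rough world population of 8 billion as the size of the loss; the function `expected_loss` and the probabilities tried are illustrative and not taken from the book:

```python
def expected_loss(probability, loss):
    """Expected loss = probability of the outcome times its magnitude."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return probability * loss


# Assumption: rough current world population, used only for illustration.
WORLD_POPULATION = 8_000_000_000

for p in (0.01, 0.05, 0.10):
    lives = expected_loss(p, WORLD_POPULATION)
    print(f"p = {p:.0%}: expected loss of about {lives:,.0f} lives")
```

Even this understates the argument, because it only counts people alive today and not everything that would no longer exist in the future.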

Nuclear weapons can kill.

A superhuman AI can decide what exists in the future.

__________________________

Closing words 🔚

There is no question that AI will profoundly change our lives in a short space of time.

In many jobs, the economic value that a person contributes already depends on how well they use AI as a tool.

But how long will it be before almost all jobs are performed by AI without human sensitivity or empathy?

For me, the sentence "I want to be operated on by a human" is above all a quality requirement and a question of trust.

AI is new and often not yet reliable enough.

But what if talking AI avatars can no longer be distinguished from humans?

Which company, which state will still be able to afford not to gradually hand over more responsibility to AI?

Regardless of what you think of Yudkowsky's predictions:

He may sound more dramatic than others, but he hits a sore spot.

We have opened Pandora's box with AI and are relying surprisingly heavily on hope when it comes to AI alignment.

Yudkowsky is not alone in this; the shifts in the AI Impacts surveys show a clear trend.

And now the obligatory question for you:

How do you rate the existential threat posed by AI? 😳